Observing the developments in IT over the last few years (no more than ten), one will conclude that the definition of ‘application’ is rapidly changing. As the industry moves from an application-centric architecture to a Service Oriented Architecture (S.O.A.), the focus for building functionality is moving to Service Oriented Business Applications (besides the various posts in “evolving through …”, you can find a nice and more concentrated description of that in SOBA).

The above view offers the alternative of seeing applications as a set of services and components working together to fulfil a certain business need. The technology, specifications, and standards for specifying these components and services may vary, and the choice is up to you and, of course, your environment as a whole.

In various posts here, I have proposed and exposed this kind of approach from time to time, creating any needed components and services in a model-driven way and always keeping an S.O.A. extension in mind. Even in that case, however, you need some kind of programming model that defines interfaces, implementations, and references. The advantage of this alternative is that, when building an application or solution based on services, these services can be both created specifically for the application and reused from existing systems and applications. The programming model behind this is known as Service Component Architecture (SCA): it specifies how to create and implement services, how to reuse them, and how to assemble or compose services into solutions.

The reason for this post was an interesting project about which a friend, who is involved in it, asked for my opinion. The project is AspireRFID, one of the very few RFID implementations that run in the Open Source community and therefore a candidate new niche for that domain. Although the existing approach is very interesting and very well analysed, I couldn't stop myself from saying that a more Service Oriented approach should be taken. This alternative might seem a little obscure, since the technicalities and the area of RFID might relate to a different business model than the well-explored ‘B’ in SOBA. Actually, this is not the case. This area is a promising candidate for that ‘B’ because its relations to ERPs, CRMs or whatever are limited, while those are, until now, the well-defined areas for the various open source alternatives that could be used (e.g. OSGi, ServiceMix, FUSE, Camel, Newton, Jini, Tuscany etc.). So, in that case the solution might not be an S.O.A. but a step towards one.

Who’s and why’s of Service Component Architecture (SCA)

As has been stated here and here, considering an alternative is a crucial concern when it involves the meaning that one gives to S.O.A. The SCA models the "A" in S.O.A. (and therefore not SOA). It provides a model for service construction, service assembly, and deployment. The SCA supports components defined in multiple languages, deployed in multiple container technologies, and with multiple service access methods. This means that with the SCA approach we have a set of specifications, addressing both runtime vendors and enterprise developers/architects, for building service-oriented enterprise applications.

The SCA specifications try to define a model for building Service-Oriented (Business) Applications (SOBA). These specifications are based on the following design principles:

- Independent of programming language or underlying implementation.

- Independent of host container technology.

- Loose coupling between components.

- Metadata vendor-independence.

- Security, transaction and reliability modelled as policies.

- Recursive composition.

The first draft of SCA was published in November 2005 by the Open SOA Collaboration (OSOA). OSOA is a consortium of vendors and companies interested in supporting or building a SOA. Among them are IBM, Oracle, SAP, Sun, and Tibco. In March 2007 the final 1.0 set of specifications was released. In July 2007 the specifications were adopted by OASIS, and they are being developed further in the OASIS Open Composite Services Architecture (Open CSA) member section.

Concluding - Current SCA Specifications at OpenCSA

The current set of SCA specifications hosted on the OSOA site includes:

- SCA Assembly Model

- SCA Policy Framework

- SCA Java Client & Implementation

- SCA BPEL Client & Implementation

- SCA Spring Client & Implementation

- SCA C++ Client & Implementation

- SCA Web Services Binding

- SCA JMS Binding

The important element within the SCA approach is that for the first time we have a set of specifications that address how S.O.A. can be built rather than what S.O.A. is about. Let's have a bird's eye view of SCA as a technology.

The four main elements of the SCA, aka the related specifications to start with

The set of specifications can be split into four main elements: the assembly model specification, component implementation specifications, binding specifications, and the policy framework specification. These are therefore a good starting and guiding point for the analysis and/or re-engineering needed for the project or the solution at hand.

Assembly model specification: this model defines how to specify the structure of a composite application. It defines what services are assembled into a SOBA and with which components these services are implemented. Each component can be a composite itself and be implemented by combining the services of one or more other components (i.e. recursive composition). So, in short, the assembly model specifies the components, the interfaces (services) for each component, and the references between components, in a unified, language-independent way. The assembly model is defined with XML files.

- SCA Assembly Model V1.00 (PDF)

- SCA Core XML Schema V1.0 (XSD file)

Using the SCA assembly model, we first have to define the recursive assembly of components into services. Recursive assembly allows fine-grained components to be wired into what in SCA terms are called composites. These composites can then be used as components in higher-level composites and applications. The type of a component generally defines the services exposed by the component, the properties that can be configured on the component, and the dependencies the component has on services exposed by other components.

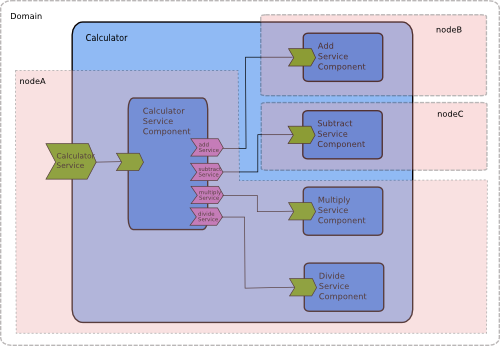

An example of an SCA assembly model, is shown in the diagram bellow:

Diagram 1: SCA assembly model

In the diagram above, Composite X is composed of two components, Composite Y and Composite Z, which are themselves composites. Composite X exposes its service using web services.

Composite Y is composed of a Java component, a Groovy component and a Jython component. The service offered by the Java component is promoted outside the composite. The Java component depends on services offered by the Groovy and Jython components. In SCA terminology these dependencies are called references. All the references of the Java component are satisfied by services offered by other components within the same composite. However, the reference on the Groovy component has been promoted outside the composite and will have to be satisfied in the context within which Composite Y is used as a component. In the diagram above this is achieved using the service promoted by Composite Z.

Composite Z is composed of a Java component, a BPEL component and a Spring component. The service offered by the BPEL component is promoted so that it can be used when the composite is used as a component in a higher-level composite.

As you can see, SCA allows the building of compositional service-oriented applications using components built on heterogeneous technologies and platforms. The SCA assembly model is generally expressed in an XML-based language called Service Component Description Language (SCDL).
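To make the recursive composition described for Composites X, Y and Z more tangible, here is a minimal sketch in plain Python. It is not SCDL and not the API of any SCA runtime; the class names, the part names (`JavaPart`, `GroovyPart`, ...) and the reference name `external` are assumptions made only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Component:
    """A leaf component with one implementation technology (hypothetical model)."""
    name: str
    implementation: str                      # e.g. "java", "groovy", "bpel"
    references: list = field(default_factory=list)


@dataclass
class Composite:
    """A composite groups parts and can itself be used as a part of another composite."""
    name: str
    parts: list
    promoted_service: str = ""               # part whose service is exposed outside
    promoted_references: list = field(default_factory=list)

    def technologies(self):
        """Collect implementation types recursively through nested composites."""
        found = set()
        for part in self.parts:
            if isinstance(part, Composite):
                found |= part.technologies()
            else:
                found.add(part.implementation)
        return found


def unsatisfied_references(composite, wires):
    """Promoted references of nested composites that no wire at this level satisfies."""
    missing = []
    for part in composite.parts:
        if isinstance(part, Composite):
            for ref in part.promoted_references:
                if (part.name, ref) not in wires:
                    missing.append((part.name, ref))
    return missing


composite_y = Composite(
    "CompositeY",
    parts=[
        Component("JavaPart", "java", references=["groovy", "jython"]),
        Component("GroovyPart", "groovy", references=["external"]),
        Component("JythonPart", "jython"),
    ],
    promoted_service="JavaPart",
    promoted_references=["external"],        # must be satisfied by whoever uses Y
)
composite_z = Composite(
    "CompositeZ",
    parts=[
        Component("JavaPart", "java"),
        Component("BpelPart", "bpel"),
        Component("SpringPart", "spring"),
    ],
    promoted_service="BpelPart",
)
composite_x = Composite("CompositeX", parts=[composite_y, composite_z],
                        promoted_service="CompositeY")

# Inside X, the reference promoted by Y is wired to the service promoted by Z.
wires = {("CompositeY", "external"): "CompositeZ"}

print(sorted(composite_x.technologies()))
print(unsatisfied_references(composite_x, wires))
```

Removing the wire leaves the promoted reference of Composite Y unsatisfied, which is exactly the situation the enclosing composite has to resolve.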

Component implementation specifications: these specifications define how a component is actually written in a particular programming language. Components are the main building blocks of an application built using SCA. A component is a running instance of an implementation that has been appropriately configured. The implementation provides the actual function of the component and can be defined with a Java class, BPEL process, Spring bean, and C++ or C code. Several containers implementing the SCA standard (meaning that they can run SCA components) support additional implementation types like .NET, OSGi bundles, etc. In theory a component can be implemented with any technology, as long as it relies on a common set of abstractions, e.g. services, references, properties, and bindings.

The following component implementation technologies are currently described:

- SCA Java Component Implementation V1.00 (PDF), XSD file

- SCA Spring Component Implementation V1.00 (PDF)

- SCA BPEL Client and Implementation V1.00 (PDF)

- SCA C++ Client and Implementation V1.00 (PDF), XSD file

- SCA COBOL Client and Implementation V1.00 (PDF)

- SCA C Client and Implementation V1.00 (PDF)

Binding specifications: these specifications define how the services published by a component can be accessed. Binding types can be configured for both external systems and internal wires between components. The current binding types described by OSOA are bindings using SOAP (web services binding), JMS, EJB, and JCA. Several containers implementing the SCA standard support additional binding types like RMI, Atom, JSON, etc. An SCA runtime should at least implement the SCA service and web service binding types. The SCA service binding type should only be used for communication between composites and components within an SCA domain. The way in which this binding type is implemented is not defined by the SCA specification and it can be implemented in different ways by different SCA runtimes. It is not intended to be interoperable. For interoperability the standardized binding types like web services have to be used.

Officially described binding types:

- SCA Web Services Binding V1.00 (PDF), XSD file

- SCA JMS Binding V1.00 (PDF), XSD file

- SCA EJB Session Bean Binding V1.00 (PDF), XSD file

- SCA JCA Binding V1.00 (PDF)
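The rule about where the SCA service binding may be used can be expressed in a few lines. The following is only a sketch in plain Python; the short binding labels (`sca`, `ws`, `jms`, `ejb`, `jca`) and the function name are assumptions chosen for illustration, not identifiers taken from the specifications.

```python
# Binding types described by OSOA, using hypothetical short labels.
INTEROPERABLE_BINDINGS = {"ws", "jms", "ejb", "jca"}


def allowed_bindings(source_domain, target_domain):
    """Return the binding types usable between two endpoints.

    The runtime-specific SCA service binding is only valid when both
    endpoints live in the same SCA domain; anything crossing the domain
    boundary has to use a standardized, interoperable binding.
    """
    if source_domain == target_domain:
        return {"sca"} | INTEROPERABLE_BINDINGS
    return set(INTEROPERABLE_BINDINGS)


print(sorted(allowed_bindings("warehouse", "warehouse")))
print(sorted(allowed_bindings("warehouse", "partner")))
```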

Policy framework specification: this specification describes how to add non-functional requirements to services. Two kinds of policies exist: interaction and implementation policies. Interaction policies affect the contract between a service requestor and a service provider. Examples of such policies are message protection, authentication, and reliable messaging. Implementation policies affect the contract between a component and its container. Examples of such policies are authorization and transaction strategies.

Summary

SCA provides a set of specifications, addressing both runtime vendors and enterprise developers/architects, for building service-oriented enterprise applications. For the first time we have a set of specifications that address how SOA can be built rather than what SOA is about. I have provided a bird's eye view of SCA as a technology.

The related concepts in detail

As stated before, the main building block of a Service-Oriented Business Application is the component. Figure 1 exhibits the elements of a component. A component consists of a configured piece of implementation providing some business function. An implementation can be specified in any technology, including other SCA composites. The business function of a component is published as a service. The implementation can have dependencies on the services of other components; we call these dependencies references. Implementations can have properties which are set by the component (i.e. they are set in the XML configuration of a component).

Figure 1 - SCA Component diagram (taken from the SCA Assembly Model specification)
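The relation between implementation, service, reference and property can be sketched as follows. This is plain Python used purely as an illustration; the class names, the `configure` helper and the exchange rate value are hypothetical and do not come from any SCA runtime.

```python
from typing import Protocol


class ExchangeRateService(Protocol):
    """Interface of the service this implementation depends on (its reference)."""
    def rate(self, currency: str) -> float: ...


class FixedRates:
    """A trivial provider of the referenced service, backed by a lookup table."""
    def __init__(self, table):
        self.table = table

    def rate(self, currency):
        return self.table[currency]


class PriceQuoteImpl:
    """Implementation whose business function is published as the 'quote' service."""
    def __init__(self, rates: ExchangeRateService, currency: str):
        self.rates = rates          # reference: a dependency on another service
        self.currency = currency    # property: a value set by the configuration

    def quote(self, amount_eur):
        return round(amount_eur * self.rates.rate(self.currency), 2)


def configure(implementation, references, properties):
    """A component is an implementation plus its configured references and properties."""
    return implementation(**references, **properties)


quote_component = configure(
    PriceQuoteImpl,
    references={"rates": FixedRates({"USD": 1.5})},   # made-up rate
    properties={"currency": "USD"},
)
print(quote_component.quote(10.0))
```

The same implementation class can be configured into several components, each with its own property values and its own wiring of the reference.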

Components can be combined into assemblies, thereby forming a business solution. From this point we get the meaning of the ‘B’, i.e. what Business may refer to. The SCA calls these assemblies composites. As shown in figure 2, a composite consists of multiple components connected by wires. Wires connect a reference and a service and specify a binding type for this connection. Services of components can be promoted, i.e. they can be defined as a service of the composite. The same holds for references. So, in principle a composite is a component implemented with other components and wires. As stated before, components can in their turn be implemented with composites, thereby providing a way for a hierarchical construction of a business solution, where high-level services (often indicated as composite services) are implemented internally by sets of lower-level services.

Figure 2 - SCA Composite diagram (taken from the SCA Assembly Model specification)

The service (or interface if you like) of a component can be specified with a Java interface or a WSDL PortType. Such a service description specifies which operations can be called on the component, including their parameters and return types. For each service the method of access can be defined. As seen before, the SCA calls this a binding type.

Figure 3 - Example XML structure defining a composite

Figure 3 exhibits an example XML structure defining an SCA composite. It's not completely filled in, but it gives a rough idea what the configuration looks like. The implementation tag of a component can be configured based on the chosen implementation technology, e.g. Java, BPEL, etc. In case of Java the implementation tag defines the Java class implementing the component.
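For readers who want something concrete to look at, the sketch below embeds a small composite definition in a Python string and lists what it declares. The element and attribute names follow the SCA 1.0 assembly schema to the best of my knowledge; the composite, component and class names (`QuoteComposite`, `example.QuoteImpl`, ...) are hypothetical, and the parsing code is an illustration using the standard library, not part of any SCA runtime.

```python
import xml.etree.ElementTree as ET

SCA_NS = "http://www.osoa.org/xmlns/sca/1.0"   # assumed SCA 1.0 namespace
IMPL_PREFIX = "{" + SCA_NS + "}implementation."

# Hypothetical composite: one promoted service, two components, one wire.
COMPOSITE_XML = f"""
<composite xmlns="{SCA_NS}" name="QuoteComposite">
  <service name="QuoteService" promote="QuoteComponent"/>
  <component name="QuoteComponent">
    <implementation.java class="example.QuoteImpl"/>
    <property name="currency">USD</property>
    <reference name="rates" target="RatesComponent"/>
  </component>
  <component name="RatesComponent">
    <implementation.java class="example.RatesImpl"/>
  </component>
</composite>
"""


def describe(xml_text):
    """Summarise each component: implementation type, class, properties, references."""
    ns = {"sca": SCA_NS}
    root = ET.fromstring(xml_text.strip())
    summary = {}
    for comp in root.findall("sca:component", ns):
        # The implementation tag encodes the technology, e.g. implementation.java
        impl = next(child for child in comp if child.tag.startswith(IMPL_PREFIX))
        summary[comp.get("name")] = {
            "implementation": impl.tag[len(IMPL_PREFIX):],
            "class": impl.get("class"),
            "properties": {p.get("name"): p.text
                           for p in comp.findall("sca:property", ns)},
            "references": {r.get("name"): r.get("target")
                           for r in comp.findall("sca:reference", ns)},
        }
    return summary


result = describe(COMPOSITE_XML)
for name, details in result.items():
    print(name, details)
```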

Deployment and the Service Component Architecture contributions, clouds, and nodes

SCA composites are deployed within an SCA domain. An SCA domain (as shown in Figure 4) represents a set of services providing an area of business functionality that is controlled by a single organization. A single SCA domain defines the boundary of visibility for all SCA mechanisms. For example, SCA service bindings (recall the SCA binding types explained earlier) only work within a single SCA domain. Connections to services outside the domain must use the standardized binding types, such as web services. The SCA policy definitions likewise only work within the context of a single domain. In general, external clients of a service that is developed and deployed using SCA should not be able to tell that SCA was used to implement the service; it is an implementation detail.

Figure 4 - SCA Domain diagram (taken from the SCA Assembly Model specification)

An SCA domain is usually configured using XML files. However, an SCA runtime may also allow the dynamic modification of the configuration at runtime.

An SCA domain may require a large number of different artifacts in order to work. In general, these artifacts consist of the XML configuration of the composites, components, wires, services, and references. We of course also need the implementations of the components, specified in all kinds of technologies (e.g. Java, C++, BPEL, etc.). To bundle these artifacts the SCA defines an interoperable packaging format for contributions (ZIP). SCA runtimes may also support other packaging formats like JAR, EAR, WAR, OSGi bundles, etc. Each contribution complies with at least the following characteristics:

- It must be possible to present the artifacts of the packaging to SCA as a hierarchy of resources based off of a single root.

- A directory resource should exist at the root of the hierarchy named META-INF.

- A document should exist directly under the META-INF directory named ‘sca-contribution.xml’ which lists the SCA Composites within the contribution that are runnable.

A goal of the SCA approach to deployment is that the contents of a contribution should not need to be modified in order to install and use the contents of the contribution in a domain.
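The packaging characteristics listed above can be checked mechanically for the ZIP format. The sketch below uses only the Python standard library; the helper names and the file names inside the example archive are assumptions for illustration, and the descriptor content is deliberately left as a placeholder.

```python
import io
import zipfile

DESCRIPTOR = "META-INF/sca-contribution.xml"


def build_contribution(files):
    """Create an in-memory ZIP from a {path: content} mapping (illustration only)."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        for path, content in files.items():
            archive.writestr(path, content)
    return buffer.getvalue()


def has_contribution_descriptor(zip_bytes):
    """True if the archive holds sca-contribution.xml directly under META-INF."""
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        return DESCRIPTOR in archive.namelist()


good = build_contribution({
    DESCRIPTOR: "<!-- placeholder: lists the runnable composites -->",
    "quote.composite": "<!-- placeholder composite definition -->",
})
bad = build_contribution({"quote.composite": "<!-- no descriptor packaged -->"})

print(has_contribution_descriptor(good))
print(has_contribution_descriptor(bad))
```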

An SCA domain can be distributed over a series of interconnected runtime nodes, i.e. it is possible to define a distributed SCA domain. This enables the creation of a cloud consisting of different nodes each running a contribution within the same SCA domain. Nodes can run on separate physical systems. Composites running on nodes can dynamically connect to other composites based on the composite name instead of its location (no matter in which node the other composite lives), because they all run in the same domain. This also allows for dynamic scaling, load balancing, etc. Figure 5 shows an example distributed SCA domain, running on three different nodes. In reality you don't need different nodes (and even different components) for this example, but it makes the idea clear.

Figure 5 - A distributed SCA domain running on multiple nodes (taken from the Tuscany documentation)
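The idea of addressing a composite by name rather than by location can be sketched with a small registry. This is a toy model in plain Python under my own assumptions: the `Domain` class, the node names and the composite name are hypothetical, and a real runtime such as Tuscany does considerably more.

```python
class Domain:
    """Toy registry mapping composite names to the node currently hosting them."""

    def __init__(self, name):
        self.name = name
        self._node_of = {}

    def deploy(self, composite_name, node):
        # Deploying again under the same name simply moves the composite.
        self._node_of[composite_name] = node

    def locate(self, composite_name):
        return self._node_of[composite_name]


def call(domain, composite_name, payload):
    """The caller only knows the composite name; the domain resolves the node."""
    node = domain.locate(composite_name)
    return f"{payload} handled by {composite_name} on {node}"


domain = Domain("example-domain")
domain.deploy("CatalogComposite", "nodeA")
first = call(domain, "CatalogComposite", "lookup")

# Moving the composite to another node does not change the calling code.
domain.deploy("CatalogComposite", "nodeB")
second = call(domain, "CatalogComposite", "lookup")

print(first)
print(second)
```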

Implementations of the Service Component Architecture

The SCA is broadly supported with both commercial and open source implementations.

Open source implementations:

- Apache Tuscany (official reference implementation). The current version is 1.4, released in January 2009. They are also working on a 2.0 version which they aim to run in an OSGi enabled environment.

- The Newton Project.

- Fabric3. A platform for developing, assembling and managing distributed applications based on SCA standards. Fabric3 leverages SCA to provide a standard, simplified programming model for creating services and assembling them into applications.

Commercial implementations:

- ActiveMatrix Service Grid from TIBCO.

- Oracle Tuxedo.

- IBM WebSphere Application Server Feature Pack for SCA.

Concluding…

In concluding the analysis of this alternative, which combines the SCA with the overall and therefore holistic Reference Architecture for S.O.A., we have exposed a step towards a complete Architectural Design and a guide to implementing the proper scheme for the case at hand. The concerns covered by the Reference Architecture approach and by the SCA approach here, together with the strategic decisions that the organisation or the project has in ‘mind’, should be able to provide specific specifications (in the technical, business and conceptual analysis) about the meanings and directions that the approach is aiming to take. Defining the ‘B’s and ‘A’s is therefore the mission of these approaches, as is clarifying the meaning of the entities involved from a context-based view.

So, as far as the S.O.A. ‘infects’ that have to do with the proper definition of Architecture are concerned, we may have a logical solution through divide and conquer. With the Service Component Architecture (SCA) as the next step after the Reference Architecture’s decisions, providing the means for analysing and decomposing the Service Oriented Business Applications (SOBA) involved, we get a clearer decomposition and definition of the ‘B’ (the business meaning in the case at hand) from the ‘A’ (the architecture of the whole organisation or project).