Client-side WSDL processing with Groovy and Gant

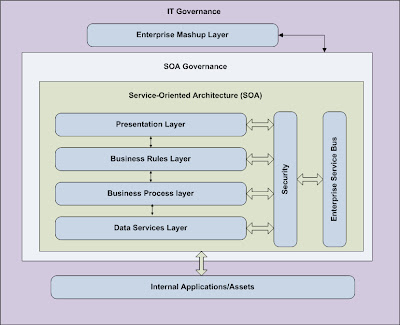

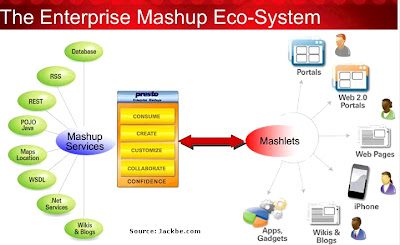

Like it or not, service-oriented architecture (SOA) is a hot topic, and SOAP-based Web services have emerged as the most common implementation of SOA. But, as happens with all new-comings, "SOA reality brings SOA problems." You can mitigate these problems by creating useful Web service clients, and also by thoroughly testing your Web services on both the server side and the client side. WSDL files play a central role in both of these activities, so in this post i will extent the client autogeneration approach and dynamic client for a web service going into an alternative (and many time quicker) path, using an extensible toolset that facilitates client-side WSDL processing using Gant and Groovy.

The real life needs-requirements emerging …

The real SOA-wise for Web services, as real life (and furthermore mature SOA standards) demand, should be interoperability. Although, in various projects the sensitivity and depth of the term interoperability should be defined well, in those projects always, there should be a cross-platform Web service testing team responsible for testing:

- functional aspects as well as the

- performance,

- load, and

- robustness

of Web services. In the ‘open sea’ of open source and legacy related applications, with mixture of standards and a batch of tools available around for various jobs and tasks, I realized the need for a….

- small,

- easy-to-use,

- command-line-based solution

for WSDL processing. I wanted the toolset to help testers and developers check and validate WSDL 1.1 files coming from different sources for compatibility with various Web service frameworks, as well as generating test stubs in Java to make actual calls. For the Java platform, that meant using Java 6 wsimport, Axis2, XFire, and CXF.

Searching for solution…

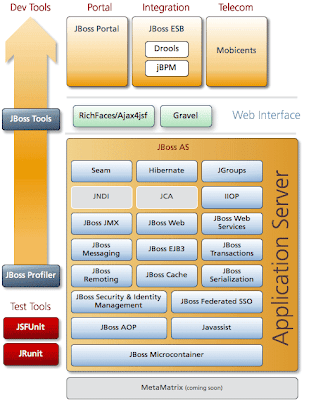

I’ve started client-side test development with XFire, but then switched to Axis2 because of changing customer requirements in our agile project. (Axis2 was considered to be more popular and widespread than XFire.) I have also used ksoap2 -- a lightweight Web service framework especially for the Java ME developer. We didn't expand the toolset to use ksoap2 because it has no WSDL-to-Java code generator.

Besides being controllable via simple commands, the toolset had to be able to integrate at least the WSDL checker into an automated build environment like Ant. One solution would have been to develop everything as a set of Ant targets. But executing everything with Ant is cumbersome when tasks become more complex, and you need control structures like if-then-else or for loops.

Even using ant-contrib binds you to XML-structures that are not easy to read, although you will have more functionality available. Anyhow, in the end you might need to implement some jobs as Ant tasks.

solution’s profile, overview:

All of this is possible, of course, but I was looking for a more elegant solution. Finally, I decided to use Groovy and a smart combination of Groovy plus Ant, called Gant. The components I have developed for the resulting Toolset can be divided into two groups:

- The Gant part is responsible for providing some "targets" for the tester's everyday work, including the WSDL-checker and a Java parser/modifier component.

- The WSDL-checker part is implemented with Groovy, but callable inside an Ant environment (via Groovy's Ant task) as part of the daily build process

That is an overview of the programming and scripting languages I used to build the Groovy and Gant Toolset. Now let's consider the technologies in detail.

Overview of Ant, Groovy, and Gant

Apache Ant is a software tool for automating software build processes. It is similar to make but is written in the Java language, requires the Java platform, and is best suited to building Java projects. Ant is based on an XML description of targets and their dependencies. Targets include tasks that every project needs, like clean, compile, javadoc, and jar. Ant is the de-facto standard build tool for Java, although Maven is making inroads.

Groovy is an object-oriented programming and scripting language for the Java platform, with features like those of Perl, Ruby, and Python. The nice thing is that, Groovy sources are dynamically compiled to Java bytecode that works seamlessly with your own Java code or third-party libraries. By means of the Groovy compiler, you can also produce bytecode for other Java projects. It is fair to say that I am biased towards Groovy compared to other scripting languages such as Perl or Ruby. While other people's preferences and experiences may be different from mine, the integration between Groovy and Java code is thorough and smooth. It was also easy, coming from Java, to get familiar with the Groovy syntax. What made Groovy especially interesting for solving my problems was its integration with Ant, via AntBuilder.

Gant (shorthand for Groovy plus Ant) is a build tool and Groovy module. It is used for scripting Ant tasks using Groovy instead of XML to specify the build logic. A Gant build specification is just a Groovy script and so -- to quote Gant author Russel Winder -- can deliver "all the power of Groovy to bear directly, something not possible with Ant scripts." While it might be considered a competitor to Ant, Gant relies on Ant tasks to actually do things. Really it is an alternative way of doing builds using Ant, but using a programming language, instead of XML, to specify the build rules. Consider using Gant if your Ant XML file is becoming too complex, or if you need the features and control structures of a scripting language that cannot be easily expressed using the Ant syntax.

What you see is what you get -- toolset contents and prerequisites

All the code above for the Groovy and Gant Toolset has been tested on a Windows XP and Windows 2003 Server OS with Java 5 and Java 6 using Eclipse 3.2.2 . In order to play with the toolset in its entirety, you will need to install Java 6, Axis2-1.3, XFire-1.2.6, CXF-2.0.2, Groovy-1.0, and Gant-0.3.1. But you can configure the toolset using a normal property file and exclude WSDL checks for frameworks you are not interested in, including Java 6's wsimport.

Installing the frameworks is simple: just extract their corresponding archive files. I assume you have either Java 5 or Java 6 running on your machine. You can use the Java 6 wsimport WSDL checker even with Java 5; you just need Java 6's rt.jar and tools.jar as an add-on in a directory of your choice. Installing Groovy and Gant usually takes a matter of minutes. If you follow my advice of placing Groovy add-ons -- including the gant-0.3.1.jar file -- in your <user.home>/.groovy/lib directory you do not even have to touch your original Groovy installation.

After the installation steps, you have to keep in mind the following artifacts that have been used or modified accordingly for my environment:

build.gant is the Gant script that contains Gant targets with Groovy syntax. build.properties is needed to customize the Gant script and the Groovy WSDL checker. - A set of jar files (including the Gant module) to be placed in your

<user.home>/.groovy/lib directory to enrich Groovy for the toolset. - An Eclipse Java project called JavaParser that is used to scan the generated Axis2 stub in order to modify it for use with HTTPS, chunking, etc. Two

SSLProtocolFactory classes are included with this distribution for use with Axis2 and Java 6 (or ksoap2). They will be helpful when it comes to cipher suite handling. - An Eclipse Groovy project called WSDL-Checker that contains Groovy classes that check WSDL files by calling Web service framework code generators and analyzing the output files. WSDL-Checker also validates WSDL files using the CXF validator tool (if you have CXF installed and enabled). This project was created using the Eclipse-Groovy-Plugin.

- A small Ant script that demonstrates how to call the Groovy WSDL checker from Ant as well as how to handle the checker's response.

- A directory with sample WSDL files.

- An Eclipse project to call a public Web service (GlobalWeather) provided as a JUnit test; it takes a generated Axis2 stub that has been modified by JavaParser (one JUnit test with Axis2 data binding "

adb" and one with "xmlbeans"). - An Eclipse workspace preferences file (

Groovy_Gant.epf) to assist in setting all the needed Eclipse classpath variables and user libraries. - Two Groovy scripts that call public Web services by means of the Groovy SOAP extension -- just so you can see what Groovy has to offer in this area

Once you've set up your environment you'll be ready to begin familiarizing yourself with the Groovy and Gant Toolset. For those who need it, here is a quick primer on the client side of Web services, which you will need to understand in order to follow the discussion in the remainder of the article.

The client side of Web services

A WSDL file incorporates all the information that is needed to create a Web service client. In order to create the client, a Web service framework's code generator reads the WSDL file. Based on the definitions found in the WSDL file it creates a Java stub or proxy class that mimics the interface of the Web service. Depending on the switches, this stub code can become very large and include all the referenced data types as inner classes. A better option is to let the generator create a couple of classes in different packages that will be the basis for javadoc generation later on. All the resulting classes are Java source code that you can study. Nevertheless, it is generated code, and as such it has its own "look."

For functional and performance testing it might be necessary to modify the client stub. This is especially true when it comes to using HTTPS, instead of HTTP, to control the client-side debug level via a parameter (instead of using an XML config file), or to deal with HTTP chunking. (HTTP 1.1 supports chunked encoding, which allows HTTP messages to be broken up into several parts. Chunking is most often used by the server for responses, but clients can also chunk large requests.)

Particularly during testing, you may decide to just say "OK" to all self-signed SSL certificates. Therefore, when using HTTPS, you could need a specific SSL Socket Factory that allows for accepting all certificates in test mode. For performance tests, you might even want to control the number of opened connections, and you will want to re-use the same connection for different calls coming from the same client. This article is accompanied by a Java 5 parser and an Axis2 client stub modifier to deal with such scenarios.

Because you will get the parser/modifier as source code, you can customize it to "inject" some code for features not covered by my solution. What's more, you can use the whole code as a blueprint to write your own modifier for frameworks other than Axis2.

Gant targets for WSDL processing

A Gant script -- build.gant -- is the basis for all the features of the Groovy and Gant Toolset. Every task a user can perform is expressed as a Gant target. Using the directory that contains build.gant as your working dir, you can get an overview of all the supported targets, together with a description, by typing gant or gant -T in your command shell. The gant command refers to the "default" target you can implement, the gant -T feature (t stands for "table of contents") comes for free as part of the Gant implementation.

Listing 1. Calling Gant from the command line

1: >gant

2:

3: USAGE:

4: gant available (checks your build.properties settings for available frameworks)

5: gant wsdls (prints all wsdl files with their target endpoints, together with wsdl file 'shortnames' to be used by other targets regex)

6: gant [-D "wsdl=<wsdl_shortname_regex>"] javagen (generate Axis2 based Java code from wsdl, compile, provide javadoc, and generate necessary jar/zip files)

7: gant [-D "wsdl=<wsdl_shortname_regex>"] check (check one or more wsdl files for compatibility with installed code generators & validator)

8: gant [-D "wsdl=<wsdl_shortname_regex>"] validate (validate one or more wsdls files using the CXF validator tool (if CXF is installed))

9: gant [-D collect] alljars (generates a directory with all Axis2 client jars, src/javadoc-ZIPs, and xsb resource files if xmlbeans is used)

10: gant -D "wsdl=<wsdl_shortname_regex>" [-D "replace=<old>,<new>"] ns2p (prints a namespace-to-package mapping for a wsdl file)

11:

12: Produced listings in your 'results' directory:

13: output-<tool>.txt & error-<tool>.txt with infos/errors/warnings for the code generation

14:

15:

The following commands are available as features of the Groovy and Gant Toolset:

All Gant targets are available as commands for the user and can be combined like so:

gant -D "wsdl=GlobalW.+" -D collect javagen alljars

Listing 2. Sample output from the 'gant wsdls' command

1: G:\JavaWorld\Groovy_Gant>gant wsdls

2: list all wsdls

3:

4: AWSECommerceService [amazon/webservice/AWSECommerceService/wsdl/AWSECommerceService.wsdl]

5: [<soap:address location="http://soap.amazon.com/onca/soap?Service=AWSECommerceService"/>]

6:

7: AddNumbers [handlers/webservice/svc-addNumber/wsdl/AddNumbers.wsdl]

8: [<soap:address location="http://localhost:9000/handlers/AddNumbersService/AddNumbersPort" />]

9:

10: Callback [callback/webservice/svc-callback/wsdl/Callback.wsdl]

11: [<soap:address location="http://localhost:9005/CallbackContext/CallbackPort"/>

12: <soap:address location="http://localhost:9000/SoapContext/SoapPort"/>]

13:

14: CurrencyConverter [public-services/webservice/svc-lookup/wsdl/CurrencyConverter.wsdl]

15: [<soap:address location="http://glkev.webs.innerhost.com/glkev_ws/Currencyws.asmx" />]

16:

17: GlobalWeather [public-services/webservice/svc-lookup/wsdl/GlobalWeather.wsdl]

18: [<soap:address location="http://www.webservicex.net/globalweather.asmx" />]

19:

20: HelloWorld [hello-world/webservice/svc-hello-world/HelloWorld.wsdl]

21: [<soap:address location="https://localhost:9001/SoapContext/SoapPort"/>]

22:

23: JMSGreeterService [jms-greeter/webservice/svc-greeter/wsdl/JMSGreeterService.wsdl]

24: []

25:

26: Logon [security/webservice/svc-logon/wsdl/Logon.wsdl]

27: [<soap:address location="http://localhost:4708/com/myCompany/myProject/logon/generated/interf/Logon"/>]

28:

29: YellowPages [public-services/webservice/svc-lookup/wsdl/YellowPages.wsdl]

30: [<soap:address location="http://ws.soatrader.com/delimiterbob.com/0.1/YellowPages"/>]

31:

32: G:\JavaWorld\Groovy_Gant>

33:

Gant configuration using build.properties

Like an Ant file, the Gant execution is configured by a property file called build.properties which resides in the same directory as build.gant, as shown in Listing 3.

Listing 3. Gant configuration using build.properties

1: # Property file to customize the Gant script for WSDL processing.

2:

3: # ------------------------------------------------------------------------------

4: # Tell Gant which WSDL checker you have installed

5: # ------------------------------------------------------------------------------

6: axis.available=yes

7: xfire.available=yes

8: cxf.available=yes

9: wsimport.available=yes

10:

11:

12: # ------------------------------------------------------------------------------

13: # Axis2 information

14: # ------------------------------------------------------------------------------

15: axis2.install.dir=./ThirdPartyTools/axis2-1.3

16: axis2.lib.dir=${axis2.install.dir}/lib 17: axis2.version=1.3

18:

19: # ------------------------------------------------------------------------------

20: # XFire information

21: # ------------------------------------------------------------------------------

22: xfire.install.dir=./ThirdPartyTools/xfire-1.2.6

23: xfire.lib.dir=${xfire.install.dir}/lib 24: xfire.version=1.2.6

25: ant.jar=./ThirdPartyTools/groovy-1.0/lib/ant-1.6.5.jar

26:

27: # ------------------------------------------------------------------------------

28: # CXF information

29: # ------------------------------------------------------------------------------

30: cxf.install.dir=./ThirdPartyTools/apache-cxf-2.0.2-incubator

31: cxf.lib.dir=${cxf.install.dir}/lib 32: cxf.version=2.0.2

33:

34: # ------------------------------------------------------------------------------

35: # Java6 information (only necessary if you are running Java5)

36: # ------------------------------------------------------------------------------

37: java6.lib.dir=./ThirdPartyTools/Java6/lib

38:

39: # ------------------------------------------------------------------------------

40: # Java parser information

41: # ------------------------------------------------------------------------------

42: java-parser.install.dir=./JavaParser

43:

44: # ------------------------------------------------------------------------------

45: # Code generation information

46: # ------------------------------------------------------------------------------

47:

48: wsdl.root.dir=./wsdl

49: stubs.package.prefix=com.mycompany.myproject_axis

50: # if 'namespace-to-package replacement' is enabled,

51: # we try to provide a suitable mapping for Axis2

52: # but - consider as an alternative - using a 'ns2p.properties' file per wsdl file

53: # with the 'gant ns2p' command output as a basis

54: ns2p.replace=no

55: ns2p.namespace=myproject.mycompany.com

56: ns2p.package=com.mycompany.myproject_axis

57:

58: # Axis2 data binding= adb|xmlbeans

59: axis.data.binding=adb

60: output.axis.file=./results/output-axis.txt

61: error.axis.file=./results/error-axis.txt

62: output.axis.codeGenerator=./GeneratedCode_axis

63:

64: output.xfire.file=./results/output-xfire.txt

65: error.xfire.file=./results/error-xfire.txt

66: output.xfire.codeGenerator=./GeneratedCode_xfire

67:

68: output.cxf.file=./results/output-cxf.txt

69: error.cxf.file=./results/error-cxf.txt

70: output.cxf.codeGenerator=./GeneratedCode_cxf

71:

72: output.wsimport.file=./results/output-wsimport.txt

73: error.wsimport.file=./results/error-wsimport.txt

74: output.wsimport.codeGenerator=./GeneratedCode_wsimport

75:

76: output.validator.file=./results/output-validator.txt

77: error.validator.file=./results/error-validator.txt

78:

79: generated.code.dir=./GeneratedCode

80: client.jar.dir=./ClientJarFiles

81:

82: # ------------------------------------------------------------------------------

83: # Javadoc information

84: # ------------------------------------------------------------------------------

85: javadoc.enabled=yes

86: javadoc.packageNames=com.*,tools.*,org.*,net.*

87: project.copyright=Copyright © 2007 - Mycompany.com

88:

89:

90: # ------------------------------------------------------------------------------

91: # Optional proxy information

92: # ------------------------------------------------------------------------------

93: proxy.enabled=no

94: proxy.host=myProxyHost

95: proxy.port=81

Expressing dependencies

With Gant you can express dependencies as you would do with Ant, and you can call all available Ant tasks that are provided by Groovy's ant-1.6.5jar (found in the <GROOVY_HOME>/lib directory). You do not have to create a Groovy AntBuilder for this purpose because Gant does it for you.

Looking at the build.gant script excerpts in Listing 4, you can see that a Gant script is really a Groovy script.

Listing 4. Gant script (excerpt from build.gant)

1: import org.apache.commons.lang.StringUtils

2:

3: ...

4: boolean wsdlRegexProvided = false

5: boolean wsdlRegexOK = false

6: def wsdlFilesMatchingRegexList = []

7:

8: def readProperties() { 9: Ant.property(file: 'build.properties')

10: def props = Ant.project.properties

11: return props;

12: }

13: def antProperty = readProperties()

14:

15: long startTime = System.currentTimeMillis()

16:

17: // Classpath for Java code generation tool

18: def java2wsdl_classpath = Ant.path { 19: fileset(dir: antProperty.'axis2.lib.dir') { 20: include(name: '*.jar')

21: }

22: }

23:

24: def generateJavaCode = { wsdlFile, javaSrcDir -> 25: def ns2pValues = ''

26: if (antProperty.'ns2p.replace'.equals("yes")) { 27: //TODO: consider replacing regex by XmlSlurper

28: def pattern = /^.*(?:targetNamespace=")([^"]+)"/

29: def matcher = pattern.matcher('') 30: def ns2pMap = [:]

31: new File(wsdlFile).eachLine { 32: matcher.reset(it)

33: while (matcher.find()) { 34: String namespace = matcher.group(1)

35: if (!ns2pMap.containsKey(namespace)) { 36: ns2pMap.put(namespace, (namespace-'http://').replace('/', '.').replaceAll(antProperty.'ns2p.namespace',antProperty.'ns2p.package')) 37: }

38: }

39: }

40: Iterator iter = ns2pMap.keySet().iterator();

41: StringBuilder sbuf = new StringBuilder(256)

42: while (iter.hasNext()) { 43: String key = iter.next();

44: String value = ns2pMap.get(key)

45: sbuf.append(key)

46: sbuf.append('=') 47: sbuf.append(value)

48: sbuf.append(',') 49: }

50: if (sbuf.length() > 0) { 51: ns2pValues = sbuf.replace(sbuf.length()-1, sbuf.length(), '').toString()

52: }

53: else { 54: println 'No namespace-to-package replacement possible: nothing matched'

55: }

56: }

57:

58: def wsdlFileDir = StringUtils.substringBeforeLast(wsdlFile, '/')

59: def stubPackageSuffix = (wsdlFile - wsdlFileDir - '/' - '.wsdl').toLowerCase() + '.soap.stubs'

60: def outputDir = javaSrcDir-'/src' // Axis2 will generate a /src dir for us

61: def outFile = new File("${antProperty.'error.axis.file'}") 62: outFile << wsdlFile + NL

63: Ant.java(classname: 'org.apache.axis2.wsdl.WSDL2Java',

64: classpath: java2wsdl_classpath,

65: fork: true,

66: output: "${antProperty.'output.axis.file'}", 67: error: "${antProperty.'error.axis.file'}", 68: append: "yes",

69: resultproperty: "taskResult_$wsdlFile") { 70: if (antProperty.'proxy.enabled'.equals("yes")) { 71: println "Using proxy ${antProperty.'proxy.host'}:${antProperty.'proxy.port'}" 72: jvmarg (value: "-Dhttp.proxyHost=${antProperty.'proxyhost'}") 73: jvmarg (value: "-Dhttp.proxyPort=${antProperty.'proxyport'}") 74: }

75: arg (value: '-uri')

76: arg (value: wsdlFile)

77: arg (value: '-d')

78: arg (value: "${antProperty.'axis.data.binding'}") 79: arg (value: '-o')

80: arg (value: outputDir)

81: arg (value: '-p')

82: arg (value: "${antProperty.'stubs.package.prefix'}.$stubPackageSuffix") 83: arg (value: '-u')

84: arg (value: '-s')

85: if (new File("$wsdlFileDir/ns2p.properties").exists()) { 86: println 'using provided namespace-to-package mapping per wsdl file'

87: arg (value: '-ns2p')

88: arg (value: "$wsdlFileDir/ns2p.properties")

89: }

90: else if (antProperty.'ns2p.replace'.equals("yes")) { 91: println 'using provided namespace-to-package mapping for ALL wsdl files that should be processed'

92: arg (value: '-ns2p')

93: arg (value: ns2pValues)

94: }

95: arg (value: '-t')

96: }

97: print "$wsdlFile "

98: if (Ant.project.properties."taskResult_$wsdlFile" != '0') { 99: println '... ERROR'

100: }

101: else { 102: println '... OK'

103: }

104: }

105:

Gant specialities

You'll note that Gant targets are Groovy closures. Inside closures you can define new closures and assign them to variables, but you are not allowed to declare a method. So, the following is allowed:

target ( ) {

def Y = { }

}

But this isn't:

target ( ) {

def Y ( ) { }

}

Gant has two other characteristic features. First, every target has a name and a description, for instance

target (alljars: 'generate a directory with all client jar/src/doc/res archives') {

depends(javagen)

println alljars_description

To access this description string, you can use the variable "<target-name>_description", which is created by Gant for your convenience.

Second, if you want to hand over a WSDL regular expression "property" specified in the command line to the build.gant script, you can use the -D "wsdl=<regex>" idiom. The syntax is similar to that used for Java VM command-line property setting: java -Dproperty=value, but on Windows you really need the space after "-D", because Gant uses the Apache Commons CLI library. You can access this property in your Gant script by using the following "try/catch" trick (demonstrated for the property wsdl) that is evaluated in the init target:

1: target (init: 'check wsdl regex match') { 2: try { 3: // referencing 'wsdl' in this try block checks for the existence of this variable

4: // if you call Gant with '>gant -D "wsdl=<wsdl_target'>" then the variable 'wsdl'

5: // will be created by Gant for you, otherwise the catch block will be executed

6: def wsdlRootDir = new File(antProperty.'wsdl.root.dir')

7: def wsdlList = []

8: boolean atLeastOneMatchingWsdlFileFound = false

9: wsdlRootDir.eachFileRecurse{ 10: if (it.isFile() && it.name.endsWith('.wsdl')) { 11: def wsdlFile = it.canonicalPath.replace('\\', '/') 12: def startIndex = wsdlFile.indexOf('/wsdl') + 1 13: def endIndex = wsdlFile.lastIndexOf('.') 14: def shortname = StringUtils.substringAfterLast(wsdlFile, '/')-'.wsdl'

15: if (shortname == wsdl) { 16: println "---> Provided regex matched '$shortname'"

17: wsdlFilesMatchingRegexList << wsdlFile

18: atLeastOneMatchingWsdlFileFound = true

19: return;

20: }

21: }

22: }

23: wsdlRegexProvided = true

24: if (atLeastOneMatchingWsdlFileFound) { 25: wsdlRegexOK = true

26: }

27: else { 28: println "\tWarning: No wsdl file found that matches regex pattern '$wsdl'!"

29: wsdlRegexOK = false

30: }

31: }

32: catch (Exception e) { 33: // no regex evaulation necessary

34: }

35: }

In build.gant Groovy's syntax is used to handle foreach loops, proxy usage, and regular expressions to narrow the WSDL processing to a few files only. The WSDL checker and validator, however, are a set of pure Groovy files that have the facade WsdlChecker.main () as a central entry point. Although Groovy comes with nice GStrings and a powerful "GDK" (Groovy Development Kit) it appears, that Jakarta Commons Lang StringUtils is still a valuable add-on.

Checking WSDL files -- a Groovy task

As I mentioned in the introduction, the Groovy and Gant Toolset should help testers and developers check WSDL files coming from different sources for compatibility with a variable set of Web service frameworks, including WSDL validation. Testing for compatibility, in this context, means: call every Web service framework's WSDL-to-Java code generator and check the resulting files for exceptions, errors, and warnings. If CXF is installed and enabled in the build.properties file, then you can run CXF's validator tool, too. All these tasks are performed by pure-Groovy classes. The design is simple: every framework's code generator and its output handling is mapped to a Groovy class. Each class provides two basic methods that are called by the WsdlChecker "controller" class:

def checkWsdl(wsdlURI) calls the corresponding code generator for a WSDL file.

boolean findErrorsOrWarnings() tells the "controller" if errors or warnings are found in the generator output files. If so, it prints them to the console.

In Java you would define an interface that your concrete (code generator strategy) classes would implement (you could also use an abstract class). In Groovy, however, you don't need to declare explicit interfaces -- though you could. Instead, you create a list of "checker" objects (depending on the Web service frameworks installed and enabled in build.properties) making use of Groovy closures, as shown in Listing 5.

Listing 5. Groovy closures in the WsdlChecker class (excerpt)

1: def wsdlcheck() { 2: def sortedList = wsdlList.sort()

3: for (wsdlLongName in sortedList) { 4: def wsdlURI = wsdlLongName.suffix

5: availableCheckers.each{ it.checkWsdl(wsdlURI) } 6: }

7: // print individual results and report overall result to Ant (errors only)

8: new File('wsdl_errors.txt').write(Boolean.toString(findWsdlErrors())) 9: }

10:

11: boolean findWsdlErrors() { 12: println()

13: boolean errorsFoundInAnyChecker = false

14: availableCheckers.each{ 15: errorsFoundInAnyChecker = errorsFoundInAnyChecker |

16: it.findErrorsOrWarnings()

17: }

18: return errorsFoundInAnyChecker

19: }

20:

A typical output in your shell would look like this:

1: Logon.wsdl... Axis2 check done.

2: Logon.wsdl... XFire check done.

3: Logon.wsdl... Java 6 wsimport check done.

4: Logon.wsdl... CXF check done.

5: Logon.wsdl... CXF validator check done.

6:

7: No Axis2 errors found

8:

9: No XFire errors found

10:

11: Java6 wsimport warnings found in:

12: security/webservice/svc-logon/wsdl/Logon.wsdl:

13: src-resolve: Cannot resolve the name 'impl_1:RuntimeException2' to a(n) 'type definition' component.

14:

15: No Java6 wsimport errors found

16:

17: No CXF errors found

18:

19: CXF Validation passed

Using the Groovy-Eclipse-Plugin, you can start this WsdlChecker.groovy program just like a "normal" Java program. The plugin will create a separate Groovy entry in your Eclipse environment's Run settings.

Running the WSDL checker from inside Ant

It is also possible to run the WsdlChecker as part of an Ant target. To see how you can incorporate the Groovy classes in an Ant script, look at the following Ant build.xml, which is part of the Groovy-WSDL-Checker Eclipse project:

Listing 6. Calling the Groovy WSDL checker from inside Ant

1: <project name="wsdl2java check" default="check-wsdls" basedir=".">

2: <taskdef resource="net/sf/antcontrib/antcontrib.properties"/>

3: <taskdef name="groovy"

4: classname="org.codehaus.groovy.ant.Groovy">

5: <classpath location="lib/groovy-all-1.0.jar" />

6: </taskdef>

7:

8: <target name="check-wsdls">

9: <groovy src="src/tools/webservices/wsdl/checker/WsdlChecker.groovy">

10: <classpath>

11: <pathelement location="bin-groovy"/>

12: <pathelement location="lib/commons-lang-2.3.jar"/>

13: </classpath>

14: </groovy>

15:

16: <loadfile property="wsdlErrorsFound"

17: srcfile="wsdl_errors.txt"></loadfile>

18: <if>istrue value="${wsdlErrorsFound}" /> 19: <then>

20: <echo message="we found errors in wsdl files" />

21: </then>

22: <else>

23: <echo message="NO errors found in wsdl files" />

24: </else>

25: </if>

26: </target>

27:

28: </project>

The Groovy checker is controlled by the same build.properties file as is build.gant. If you want to work with the Groovy classes in the Eclipse project in artefacts (instead of calling the checker via build.gant regex to control which WSDL file to check/validate, and the checker's mode parameter (that tells the tool whether to check for compatibility using the installed code generators or to validate with the CXF validator tool). Please note, that I could not set any values in Ant's property hashtable that would be available to Ant afterwards, because in Groovy you are working with a copy of the table. Therefore, I chose to deliver the results via Ant's loadfile task. Working with Ant's limited condition handling capabilities just isn't my cup of tea. That's why I decided to use ant-contrib with its if-then-else feature to demonstrate the checker's result evaluation in Listing 6.

Code generation and modification -- Gant and Java play together

So far, you have only seen the possibility of generating Java source code from WSDL files and checking the generated output using different Web service frameworks. I'll conclude my introduction to the Groovy and Gant Toolset with a more concrete example based on the generated code of Axis2. The corresponding Gant target in the Groovy and Gant Toolset is called javagen.

To make working with the client stub more comfortable, I'll show you how to do much more than generate code with Axis2's wsdl2Java tool You will first generate source code, but then you will modify it with a Java parser/modifier. After that you will compile it using Sun's javac (so your JAVA_HOME environment variable should point to the JDK rather that the JRE root directory), generate javadoc information, and finally produce a jar file containing the compiled code. If you're using xmlbeans you will also get an xsb resources jar file to be included in your project's classpath.

This jar file -- together with the parser/modifier bytecode delivered as japa.jar -- is the basis for client-side unit or performance tests. Code modification allows you to provide the user with a handy client stub factory that incorporates, and encapsulates, Log4J debug-level setting, HTTP/HTTPS handling (including chunking and adaption to proper cipher suite exchange), and connection control (for the underlying Jakarta httpclient).

About JavaCC

It took me some time to find a suitable Java parser. I wanted one that would be capable of handling Java 5 syntax, that was free, and that would let me plug in my source code modification feature. I chose JavaCC. You can also get the parser source, together with my modifications/add-ons, from the Resources section: see JavaParser Eclipse project.

I extended two of the original files: JavaParser.java and DumpVisitor.java. The first one provides the entry point for Groovy as a main method and calls the DumpVisitor for the Axis2-generated client stub. As you may guess, the latter is based on the Gang of Four Visitor pattern. (You may have to look closely in order to spot the changes I made to reach my targets, however. Anyone who has used the Visitor pattern knows that its usage is not easily digested!)

Additionally, I provide a package, tools.webservices.wsdl.stubutil, that incorporates all the code that is required by the modified client stub to compile and work properly. You can generate all of this as a jar file; just use the japa.jardesc file, which is part of the JavaParser Eclipse project for your (and my) convenience.

For those who want to count on Java 6 Web service support based on java.net.URL, I have included a special HttpSSLSocketFactory.java class as part of the Eclipse JavaParser project that takes care of the cipher suite handling ("accept all certificates"). You can use an instance of this class to set the default SSLSocketFactory calling, for example, javax.net.ssl.HttpsURLConnection.setDefaultSSLSocketFactory. Socket factories are used when creating sockets for secure HTTPS URL connections. Creating a client stub typically looks like this:

GlobalWeather stub = GlobalWeatherStub.createStub(ProtocolType.http, Level.INFO, Chunking.yes,

"http://www.webservicex.net/globalweather.asmx", "Connection1");

Not-so-stupid bean property settings

My Gant script, build.gant, calls the Axis2 wsdl2java code generator with the flag -s (synchronous calls only) and the hint to use the default Axis2 data binding ADB (Axis2 Databinding Framework). This will produce service-method calls with properties wrapped as Java beans. Imagine you have a lot of properties that are optional for your call, and one of the required properties tells the Web service to ignore the others. With a normal RPC, you would set parameters to null (or with xmlbeans you can try the WSDL "nillable" attribute), but this is not possible with ADB. During serialization, every object property is tested to be not equal to null. Therefore, you might write many lines of source code doing nothing more than just setting a "default" value for every property of your bean before handing it over to your Axis2 stub. To overcome this "stupid work" I have written a smart Java utility class, NOB (NullObjectBean), that impressively demonstrates the power of using reflection and Jakarta commons -- the beanutils project in this case -- assuming that performance is not a requirement when you are setting your bean properties. Populating a bean with "default" values is now as easy as calling:

WebConferenceDTO webConferenceDTO = (WebConferenceDTO) NOB.create(new WebConferenceDTO());

As a current limitation, the NOB utility can only provide values for "beans" that have a public default (empty) constructor, that have public final constant objects, or that have "simple" interface types as properties. So the algorithm would not work, for instance, for the xmlbeans data binding where interfaces with factory methods are created. (But it could be extended to do so!) Nevertheless, having this class and the Groovy and Gant Toolset in place, nothing stops you from playing around with WSDL and the client-side part of Web services.

Listing 7. Helper class 'NOB' simplifies Axis2 ADB bean handling

1: package tools.webservices.wsdl.stubutil;

2:

3: import ...

4:

5: /**

6: * Provide a special kind of "Null Object Bean (NOB)" where every primitive and non primitive bean

7: * property has a default value not equal 'null'.

8: *

9: * Whereas the Null Object pattern would provide , we simply want to abstract the handling of Axis2

10: * ADB 'null' parameters away from the client.

11: * Because this class is used in the Axis2 environment with ADB as the default data binding, it

12: * checks if the bean that is handed over to our Factory method implements

13: * 'org.apache.axis2.databinding.ADBBean'. You can easily disable this check if you want to use this

14: * class in another context or replace the check if you need such a helper class for, e.g., XMLBeans

15: * data binding.

16: *

17: * @author Klaus-Peter Berg (Klaus P. Berg@web.de)

18: */

19: public class NOB { 20:

21: private static int recursionCounter = 0;

22:

23: private NOB() { 24: // hide constructor, we are a helper class with static methods only

25: }

26:

27: /**

28: * Create a Java bean object that can be used as a "Null Object Bean" in Axis2 parameters calls

29: * using ADB data binding.

30: * We do our best to process property types as interfaces and helper classes that provide constants, too,

31: * but abstract classes are ignored. So, every effort is made to create a useful "default" Null Object bean,

32: * but I cannot guarantee that Axis2.serialize() will be able to really handle it propertly ;-)

33: *

34: * @param emptyBean

35: * the bean that should be populated with non-null property values as a default

36: * @return the "populated Null Object Bean" to be casted to the bean type you will actually need

37: */

38: @SuppressWarnings("unchecked") 39: public static Object create(final Object emptyBean) { 40: recursionCounter++;

41: final boolean firstCall = recursionCounter == 1;

42: if (firstCall

43: && !org.apache.axis2.databinding.ADBBean.class.isAssignableFrom(emptyBean

44: .getClass())) { 45: throw new IllegalArgumentException(

46: "'emptyBean' argument must implement 'org.apache.axis2.databinding.ADBBean'");

47: }

48: final BeanMap beanMap = new BeanMap(emptyBean);

49: final Iterator<String> keyIterator = beanMap.keyIterator();

50: while (keyIterator.hasNext()) { 51: final String propertyName = keyIterator.next();

52: if (beanMap.get(propertyName) == null) { 53: final Class propertyType = beanMap.getType(propertyName);

54: try { 55: if (propertyType.isArray()) { 56: final Class<?> componentType = propertyType.getComponentType();

57: final Object propertyArray = Array.newInstance(componentType, 1);

58: beanMap.put(propertyName, propertyArray);

59: } else { 60: final Object propertyValue = ConstructorUtils.invokeConstructor(

61: propertyType, new Object[0]);

62: beanMap.put(propertyName, create(propertyValue));

63: }

64: } catch (final NoSuchMethodException e) { 65: if (propertyType.isInterface()) { 66: processInterfaceType(beanMap, propertyName, propertyType);

67: } else { 68: processHelperClassType(beanMap, propertyName, propertyType);

69: }

70: } catch (final IllegalAccessException e) { 71: throw new RuntimeException(e);

72: } catch (final InvocationTargetException e) { 73: throw new RuntimeException(e);

74: } catch (final InstantiationException e) { 75: processInterfaceType(beanMap, propertyName, propertyType);

76: }

77: }

78: }

79: recursionCounter--;

80: return beanMap.getBean();

81: }

82:

83: private static void processHelperClassType(final BeanMap beanMap, final String propertyName,

84: final Class propertyType) { 85: // Class.newInstance() will throw an InstantiationException

86: // if an attempt is made to create a new instance of the

87: // class and the zero-argument constructor is not visible.

88: // Therefore, we look for public final constants of the type we need,

89: // because we assume that we process a helper class with constants (only)...

90: final Field[] fields = propertyType.getDeclaredFields();

91: for (final Field field : fields) { 92: final int mod = field.getModifiers();

93: final boolean acceptField = Modifier.isPublic(mod) && Modifier.isStatic(mod)

94: && Modifier.isFinal(mod) == true && field.getType().equals(propertyType);

95: if (acceptField) { 96: try { 97: beanMap.put(propertyName, field.get(null));

98: break; // we will take the first constant that satifies our needs

99: } catch (final Exception e1) { 100: throw new RuntimeException(e1);

101: }

102: }

103: }

104: }

105:

106: private static void processInterfaceType(final BeanMap beanMap, final String propertyName,

107: final Class propertyType) { 108: if (propertyType.isInterface()) { 109: final Object interfaceToImplement = java.lang.reflect.Proxy.newProxyInstance(Thread

110: .currentThread().getContextClassLoader(), new Class[] { propertyType }, 111: new java.lang.reflect.InvocationHandler() { 112: public Object invoke(@SuppressWarnings("unused") 113: Object proxy, @SuppressWarnings("unused") 114: Method method, @SuppressWarnings("unused") 115: Object[] args)

116: throws Throwable { 117: return new Object();

118: }

119: });

120: beanMap.put(propertyName, interfaceToImplement);

121: } else if (Modifier.isAbstract(propertyType.getModifiers())) { 122: // ignore abstract class: we cannot create an instance of it ;-)

123: }

124: }

125: }

126: